Chapter 4 Data Structures in R

This chapter delves into the following data structures and their associated R functions:

- Scalar: A singular data point, such as a string or a number.

- Vector: A one-dimensional array that contains elements of the same type.

- Matrix (

matrix): A two-dimensional array that contains elements of the same type. - List (

list): A one-dimensional array capable of storing various data types. - Data Frame (

data.frame): A two-dimensional array that can accommodate columns of different types. - Tibble (

tbl_df): Introduced by thetibblepackage (Müller and Wickham 2023), a tibble is a modern take on the data frame. As a component of thetidyverse(Wickham 2023c), tibbles offer improved features for better usability. - Data Table (

data.table): A high-performance extension of data frames by thedata.tablepackage (Barrett et al. 2024), crafted for efficient operations on large datasets. - Extensible Time Series (

xts): A specialized data frame offered by thextspackage (Ryan and Ulrich 2024b), designed explicitly for time series data.

Understanding the data structure of variables is crucial because it determines the operations and functions that can be applied to them.

4.1 Scalar

Scalars in R are variables holding single objects, such as a number, a string, a logical value, or a date.

# Numeric (a.k.a. Double)

w <- 5.5 # w is a decimal number.

class(w) # Returns "numeric".

# Integer

x <- 10L # The L tells R to store x as an integer instead of a decimal number.

class(x) # Returns "integer".

# Complex

u <- 3 + 4i # u is a complex number, where 3 is real and 4 is imaginary.

class(u) # Returns "complex".

# Character

y <- "Hello, World!" # y is a character string.

class(y) # Returns "character".

# Logical

z <- TRUE # z is a logical value.

class(z) # Returns "logical".

# Date

z <- as.Date("2022-08-12") # z is a Date value.

class(z) # Returns "Date".

# Time

z <- as.POSIXct("2022-08-12 22:30:12", tz = "America/Chicago") # z is a time value.

class(z) # Returns "POSIXct".## [1] "numeric"

## [1] "integer"

## [1] "complex"

## [1] "character"

## [1] "logical"

## [1] "Date"

## [1] "POSIXct" "POSIXt"Further discussions on functions pertaining to these data types can be found in Chapters 3.2 and 3.3.

4.2 Vector

In R, a vector is a homogeneous sequence of elements, meaning they must all be of the same data type. As such, a vector can hold multiple numbers, but it cannot mix types, such as having both numbers and words. The function c() (for combine) can be used to create a vector.

# Numeric vector

numeric_vector <- c(5, 2, 3, 4, 1)

class(numeric_vector) # Returns "numeric".

# Character vector

character_vector <- c("Hello", "World", "!")

class(character_vector) # Returns "character".

# Logical vector

logical_vector <- c(TRUE, FALSE, TRUE)

class(logical_vector) # Returns "logical".

# Date vector

date_vector <- as.Date(c("2022-08-12", "2022-08-30", "2022-09-03"))

class(date_vector) # Returns "Date".

# Unordered factor

unordered_factor <- factor(x = c("male", "male", "female", "male", "female"),

levels = c("male", "female", "other"),

ordered = FALSE)

class(unordered_factor) # Returns "factor".

# Ordered factor

ordered_factor <- factor(x = c("L", "L", "H", "L", "M", "H", "M", "M", "H"),

levels = c("L", "M", "H"),

ordered = TRUE)

class(ordered_factor) # Returns "ordered" "factor".## [1] "numeric"

## [1] "character"

## [1] "logical"

## [1] "Date"

## [1] "factor"

## [1] "ordered" "factor"In R, the class() function labels both scalars and vectors by their data type, not by their dimension. Consequently, a single integer and a vector of integers are both labeled as “integer”. This perspective aligns with the idea that a scalar is essentially a vector with only one element.

For an overview of vector operations, see Chapters 3.2.7 and 3.2.8.

4.3 Matrix (matrix)

A matrix in R is a two-dimensional array comprising both rows and columns. Every element within the matrix must belong to the same data type, be it numeric, character, or otherwise.

4.3.1 Create a Matrix

Matrices can be formed using the matrix() function, and its important inputs include:

data: The elements that constitute the matrix.nrowandncol: Specify the number of rows and columns, respectively.byrow: A logical value. If set toTRUE, the matrix is filled by rows. IfFALSE(the default), it’s filled by columns.

# Create a 3x3 numeric matrix, column-wise (default behavior)

numeric_matrix <- matrix(data = 1:9, ncol = 3)

print(numeric_matrix)## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 2 5 8

## [3,] 3 6 9# Create a 2x3 character matrix, row-wise

character_matrix <- matrix(data = letters[1:6], ncol = 3, byrow = TRUE)

print(character_matrix)## [,1] [,2] [,3]

## [1,] "a" "b" "c"

## [2,] "d" "e" "f"4.3.2 Inspect a Matrix

To gain an understanding of the type and structure of a matrix, utilize the following functions:

# Investigate numeric matrix

class(numeric_matrix) # Outputs "matrix".

is.matrix(numeric_matrix) # Outputs TRUE.

typeof(numeric_matrix) # Outputs "integer".## [1] "matrix" "array"

## [1] TRUE

## [1] "integer"# Investigate character matrix

class(character_matrix) # Outputs "matrix".

is.matrix(character_matrix) # Outputs TRUE.

typeof(character_matrix) # Outputs "character".## [1] "matrix" "array"

## [1] TRUE

## [1] "character"To ascertain the dimensions of a matrix, one can employ the nrow(), ncol(), and dim() functions:

## [1] 2## [1] 3## [1] 2 3The head() and tail() functions retrieve the initial and final n rows of a matrix, respectively, with a default value of n = 6. These functions are handy when the matrix has a substantial number of rows, making it difficult to display the entire matrix in the R console.

## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 2 5 8## [,1] [,2] [,3]

## [2,] "d" "e" "f"If a matrix has a large number of columns, making it challenging to display even with head() or tail(), RStudio offers the View() function, which presents the matrix in a spreadsheet format. Note that View() is specific to RStudio and isn’t part of base R.

Moreover, the summary() function delivers a collection of statistical measures for every column in a numeric matrix. This function is invaluable as it offers insights that might not be immediately evident from looking at the data.

## V1 V2 V3

## Min. :1.0 Min. :4.0 Min. :7.0

## 1st Qu.:1.5 1st Qu.:4.5 1st Qu.:7.5

## Median :2.0 Median :5.0 Median :8.0

## Mean :2.0 Mean :5.0 Mean :8.0

## 3rd Qu.:2.5 3rd Qu.:5.5 3rd Qu.:8.5

## Max. :3.0 Max. :6.0 Max. :9.04.3.3 Select and Modify

You can select and modify specific elements, rows, or columns using indexing:

## [1] "d"## [1] "d" "e" "f"## [,1] [,2] [,3]

## [1,] "d" "e" "f"## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 99 5 8

## [3,] 3 6 94.3.4 Add Labels

For better clarity, rows and columns can be named:

# Constructing a matrix with labeled rows and columns

labeled_matrix <- matrix(data = 1:4, ncol = 2,

dimnames = list(c("Row1", "Row2"),

c("Col1", "Col2")))

print(labeled_matrix)## Col1 Col2

## Row1 1 3

## Row2 2 4## [1] "Col1" "Col2"## Col1 Col2

## First_Row 1 3

## Second_Row 2 44.3.5 Handle Missing Values

Matrices in R can also contain missing values, represented by NA:

# Matrix with NA values

mat_with_na <- matrix(data = c(1, 2, NA, 4, 5, 6, 7, 8, 9), nrow = 3)

print(mat_with_na)## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 2 5 8

## [3,] NA 6 9## [,1] [,2] [,3]

## [1,] FALSE FALSE FALSE

## [2,] FALSE FALSE FALSE

## [3,] TRUE FALSE FALSE# Replace NA with a specified value

mat_replaced_na <- mat_with_na

mat_replaced_na[is.na(mat_replaced_na)] <- 0

# Remove entire rows with NA values

cleaned_mat <- na.omit(mat_with_na)

print(cleaned_mat)## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 2 5 8

## attr(,"na.action")

## [1] 3

## attr(,"class")

## [1] "omit"4.3.6 Element-Wise Operations

In R, matrices support a variety of operations that act on their elements. When you use typical arithmetic operators like *, +, -, and / with matrices, they operate element-wise. This means that the operation is applied to each corresponding pair of elements from the two matrices.

## [,1] [,2]

## [1,] 1 3

## [2,] 2 4## [,1] [,2]

## [1,] 5 7

## [2,] 6 8## [,1] [,2]

## [1,] 5 21

## [2,] 12 32## [,1] [,2]

## [1,] 6 10

## [2,] 8 12Note: For element-wise operations, the matrices should have the same dimensions. If they differ, R will attempt to recycle values, which can result in unexpected behavior.

4.3.7 Linear Algebra Operations

Beyond element-wise operations, R provides a variety of functions and operators to perform linear algebra operations on matrices.

Transpose

t():

The transpose of a matrix \(A\), denoted as \(A^{\prime}\), is obtained by flipping it over its diagonal. This interchanges its rows and columns.## [,1] [,2] [,3] ## [1,] 1 3 5 ## [2,] 2 4 6## [,1] [,2] ## [1,] 1 2 ## [2,] 3 4 ## [3,] 5 6Matrix Multiplication

%*%: Matrix multiplication, unlike element-wise multiplication*, uses the%*%operator. It performs the standard matrix multiplication operation, where each element of the resulting matrix is the sum of the products of elements from the corresponding rows of the first matrix (A) and the corresponding columns of the second matrix (B).For example, if \(A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}\) and \(B = \begin{bmatrix} w & x \\ y & z \end{bmatrix}\), then the result of element-wise multiplication

A * Bwill be: \(A \odot B = \begin{bmatrix} a \cdot w & b \cdot x \\ c \cdot y & d \cdot z \end{bmatrix}\), whereas the result of matrix multiplicationA %*% Bwill be: \(A B = \begin{bmatrix} (a \cdot w + b \cdot y) & (a \cdot x + b \cdot z) \\ (c \cdot w + d \cdot y) & (c \cdot x + d \cdot z) \end{bmatrix}\).(A <- matrix(1:4, nrow = 2)) # Matrix A (B <- matrix(5:8, nrow = 2)) # Matrix B A * B # Element-wise multiplication A %*% B # Matrix multiplication AB## [,1] [,2] ## [1,] 1 3 ## [2,] 2 4 ## [,1] [,2] ## [1,] 5 7 ## [2,] 6 8 ## [,1] [,2] ## [1,] 5 21 ## [2,] 12 32 ## [,1] [,2] ## [1,] 23 31 ## [2,] 34 46Crossproduct

crossprod(A, B): Computes the matrix product \(A'B\), where \(A'\) is the transpose of matrix \(A\). It’s essentially a faster version of computing the transpose of \(A\) followed by the regular matrix multiplication with \(B\).## [,1] [,2] ## [1,] 17 23 ## [2,] 39 53Kronecker Product

kronecker(A, B): The Kronecker product, often denoted by \(\otimes\), is an operation that takes two matrices and produces a block matrix. In R, it’s computed using the functionkronecker().For two matrices \(A\) and \(B\), the Kronecker product \(A \otimes B\) will have the matrix \(A\) scaled by each element of matrix \(B\).

For example, if \(A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}\) and \(B = \begin{bmatrix} w & x \\ y & z \end{bmatrix}\), then \(A \otimes B = \begin{bmatrix} a \cdot w & a \cdot x & b \cdot w & b \cdot x \\ a \cdot y & a \cdot z & b \cdot y & b \cdot z \\ c \cdot w & c \cdot x & d \cdot w & d \cdot x \\ c \cdot y & c \cdot z & d \cdot y & d \cdot z \end{bmatrix}\).

## [,1] [,2] [,3] [,4] ## [1,] 5 7 15 21 ## [2,] 6 8 18 24 ## [3,] 10 14 20 28 ## [4,] 12 16 24 32Vec Operator: The vec operator is used to stack the columns of a matrix on top of each other, creating a single column vector. In R, you can achieve this by using the

c()function or theas.vector()function to concatenate the columns of a matrix into a single vector, and then using thematrix()function to specify that the resulting vector is a column vector.For example, if \(A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}\), then \(\text{vec}(A) = \begin{bmatrix} a \\ c \\ b \\ d \end{bmatrix}\).

## [,1] ## [1,] 1 ## [2,] 2 ## [3,] 3 ## [4,] 4In this example,

vec(A)stacks the columns ofmatrix_Aon top of each other to create a single column vector.Matrix Inversion

solve():

The inverse of a matrix \(A\) (if it exists), denoted as \(A^{-1}\), is a matrix \(B\) such that the product of \(A\) and \(B\) (in that order) is the identity matrix: \(AB=I\). Usesolve()to compute the inverse.## [,1] [,2] ## [1,] -2 1.5 ## [2,] 1 -0.5Solving Linear Systems with

solve(A, B):

If you have a matrix equation of the form \(A X = B\), you can solve for matrix \(X\) usingsolve(A, B). This approach is more efficient than computingsolve(B) %*% A.## [,1] [,2] ## [1,] -1 -2 ## [2,] 2 3Matrix Exponentiation:

Raising a matrix to a power (\(n\)) is not as straightforward as using the^operator. The%^%operator from theexpmpackage (Maechler, Dutang, and Goulet 2024) facilitates matrix exponentiation in accordance with linear algebra rules.## [,1] [,2] ## [1,] 1 9 ## [2,] 4 16## [,1] [,2] ## [1,] 7 15 ## [2,] 10 22## [,1] [,2] ## [1,] 37 81 ## [2,] 54 118Eigenvalues and Eigenvectors

eigen():

Theeigen()function is used to compute the eigenvalues and eigenvectors of a matrix.## eigen() decomposition ## $values ## [1] 5.3722813 -0.3722813 ## ## $vectors ## [,1] [,2] ## [1,] -0.5657675 -0.9093767 ## [2,] -0.8245648 0.4159736Determinant

det():

You can compute the determinant of a matrix using thedet()function.## [1] -2Matrix Rank

qr():

The rank of a matrix, which is the dimension of the column space, can be determined using theqr()function.## [1] 2Singular Value Decomposition

svd(): Computes the singular value decomposition of a matrix.## [,1] [,2] ## [1,] -0.5760484 -0.8174156 ## [2,] -0.8174156 0.5760484## [1] 5.4649857 0.3659662## [,1] [,2] ## [1,] -0.4045536 0.9145143 ## [2,] -0.9145143 -0.4045536Cholesky Decomposition

chol(): Performs the Cholesky decomposition on a positive-definite square matrix.## [,1] [,2] ## [1,] 4 2 ## [2,] 2 3## [,1] [,2] ## [1,] 2 1.000000 ## [2,] 0 1.414214LU Decomposition

Matrix::lu(): Thelu()function from theMatrixpackage (Bates, Maechler, and Jagan 2024) decomposes a matrix into a product of a lower triangular and an upper triangular matrix.## LU factorization of Formal class 'denseLU' [package "Matrix"] with 4 slots ## ..@ x : num [1:4] 2 0.5 4 1 ## ..@ perm : int [1:2] 2 2 ## ..@ Dim : int [1:2] 2 2 ## ..@ Dimnames:List of 2 ## .. ..$ : NULL ## .. ..$ : NULLQR Decomposition

qr(): Decomposes a matrix into a product of an orthogonal and a triangular matrix.## $qr ## [,1] [,2] ## [1,] -2.2360680 -4.9193496 ## [2,] 0.8944272 -0.8944272 ## ## $rank ## [1] 2 ## ## $qraux ## [1] 1.4472136 0.8944272 ## ## $pivot ## [1] 1 2 ## ## attr(,"class") ## [1] "qr"Condition Number

kappa(): Estimates the condition number of a matrix, which provides insight into the stability of matrix computations.## [1] 18.77778Matrix Norm

Matrix::norm(): Thenorm()function from theMatrixpackage (Bates, Maechler, and Jagan 2024) computes various matrix norms.## [1] 5.477226

Remember to always ensure that the matrices you’re using with these functions meet the necessary preconditions (e.g., being square, positive-definite, etc.) required for each operation.

4.3.8 Combine Matrices

Matrices in R can be joined together using various functions. The most straightforward methods are rbind() for row-wise binding and cbind() for column-wise binding:

# Create matrices with identical column and row numbers

x <- matrix(data = 1:4, ncol = 2)

y <- matrix(data = 101:104, ncol = 2)

# Row-wise combination of matrices with same column number

rbind(x, y)## [,1] [,2]

## [1,] 1 3

## [2,] 2 4

## [3,] 101 103

## [4,] 102 104## [,1] [,2] [,3] [,4]

## [1,] 1 3 101 103

## [2,] 2 4 102 104Additionally, when combining multiple matrices, the Reduce() function can be quite handy:

# Create additional matrix with identical column number

z <- matrix(data = 201:206, ncol = 2)

# Use Reduce with rbind to combine multiple matrices row-wise

Reduce(f = rbind, x = list(x, y, z))## [,1] [,2]

## [1,] 1 3

## [2,] 2 4

## [3,] 101 103

## [4,] 102 104

## [5,] 201 204

## [6,] 202 205

## [7,] 203 206The Reduce() function, detailed in Chapter 3.5.10, is a prominent example of advanced higher-order functions. It facilitates consecutive operations over a list or vector. In the given context, it employs the rbind() function repeatedly to merge matrices. This technique becomes particularly beneficial when dealing with a variable or large number of matrices.

For example, to compute powers of matrices, you can use the Reduce() function to create a custom operation instead of relying on the %^% function from the expm package discussed in Chapter 4.3.7:

# Define a custom function for matrix exponentiation

`%**%` <- function(MAT, n)

Reduce(f = `%*%`, x = replicate(n = n, expr = MAT, simplify = FALSE))

# Demonstrate the sixth power of matrix x (contrast with element-wise exponentiation)

x%**%6

x^6## [,1] [,2]

## [1,] 5743 12555

## [2,] 8370 18298

## [,1] [,2]

## [1,] 1 729

## [2,] 64 4096In this revised version, the custom function %**% employs the Reduce() function to successively multiply the matrix with itself, achieving matrix exponentiation.

4.3.9 Apply Family

Apply functions are instrumental in conducting operations on matrices efficiently, offering an optimized alternative to loops (see Chapter 3.5.9). While the sapply function discussed in Chapter 3.5.9 applies a function to each element of a vector, the apply function is specialized for matrices and applies a function to each row or column of that matrix.

## [,1] [,2] [,3]

## [1,] 1 4 7

## [2,] 2 5 8

## [3,] 3 6 9## [1] 7 8 9# Calculating the sum of each column (using compact FUN notation)

apply(X = A, MARGIN = 2, FUN = max)## [1] 3 6 9The inputs for the apply function include:

X: Specifies the matrix or array.MARGIN: Designates 1 for operations across rows and 2 for columns.FUN: The function to be executed (examples includesum,mean).

For those seeking expedited calculations for specific functions like sum() or mean(), R offers faster alternatives such as rowSums(), colSums(), colMeans(), and rowMeans().

## [1] 12 15 18

## [1] 12 15 18## [1] 2 5 8

## [1] 2 5 8The sweep() function allows for efficient array manipulations, such as subtraction or division, across rows or columns using summary statistics. Specifically, it combines the capabilities of apply() and rep() to operate element-wise on a matrix. This is particularly useful when performing operations that require using a summary statistic—such as a mean—across all elements of a matrix. For example, one could use sweep() to demean a matrix by subtracting the mean of each column from every element in that respective column.

## [,1] [,2]

## [1,] 1 109

## [2,] 4 107

## [3,] 6 104

## [4,] 8 105## [1] 4.75 106.25# Use sweep to subtract each column's mean from each element in that column

sweep(x = B, MARGIN = 2, STATS = col_means, FUN = "-")## [,1] [,2]

## [1,] -3.75 2.75

## [2,] -0.75 0.75

## [3,] 1.25 -2.25

## [4,] 3.25 -1.25Manually achieving the same effect would involve a combination of apply(), rep(), and element-wise operations:

# Replicate the means to match the dimensions of the original matrix

(rep_means <- matrix(rep(col_means, each = nrow(B)), nrow = nrow(B)))## [,1] [,2]

## [1,] 4.75 106.25

## [2,] 4.75 106.25

## [3,] 4.75 106.25

## [4,] 4.75 106.25## [,1] [,2]

## [1,] -3.75 2.75

## [2,] -0.75 0.75

## [3,] 1.25 -2.25

## [4,] 3.25 -1.25In conclusion, matrices form an integral component of R, especially for multivariate analysis and linear algebra tasks. A comprehensive understanding of matrix operations in R can substantially elevate your data manipulation and analysis capabilities.

4.4 List (list)

A list (list) in R serve as an ordered collection of objects. In contrast to vectors, elements within a list are not required to be of the same type. Moreover, some list elements may store multiple sub-elements, allowing for complex nested structures. For instance, a single element of a list might itself be a matrix or another list.

4.4.1 Create a List

Lists can be formed using the list() function:

# Constructing a list

list_sample <- list(name = "John",

age = 25,

is_student = TRUE,

scores = c(88, 90, 78, 92),

grade = "B",

relationships = list(friends = c("Marc", "Victor", "Peter"),

parents = c("Valerie", "Bob"),

partner = NA))

print(list_sample)## $name

## [1] "John"

##

## $age

## [1] 25

##

## $is_student

## [1] TRUE

##

## $scores

## [1] 88 90 78 92

##

## $grade

## [1] "B"

##

## $relationships

## $relationships$friends

## [1] "Marc" "Victor" "Peter"

##

## $relationships$parents

## [1] "Valerie" "Bob"

##

## $relationships$partner

## [1] NAThe elements within the list (list_sample) encompass diverse data types: character strings (name and grade), numeric values (age), logical indicators (is_student), vectors (scores), and nested lists (relationships). This shows the versatility of lists in R, capable of storing various data types and structures.

You can also convert other objects (e.g., vectors) into lists using the as.list() function:

# Transform a numeric vector into a list

converted_list <- as.list(1:3)

# Revert the list back to a vector

unlist(converted_list) ## [1] 1 2 34.4.2 Inspect a List

To identify the type and components of a list, the following functions can be utilized:

# Identify the data structure of the list

class(list_sample) # Outputs "list".

is.list(list_sample) # Outputs TRUE.

# Identify the data type of each element within the list

sapply(list_sample, class)## [1] "list"

## [1] TRUE

## name age is_student scores grade

## "character" "numeric" "logical" "numeric" "character"

## relationships

## "list"To determine the total count of items in a list, employ the length() function:

## [1] 6To gain a comprehensive view of the list’s content and structure, the str() and summary() functions are valuable:

## List of 6

## $ name : chr "John"

## $ age : num 25

## $ is_student : logi TRUE

## $ scores : num [1:4] 88 90 78 92

## $ grade : chr "B"

## $ relationships:List of 3

## ..$ friends: chr [1:3] "Marc" "Victor" "Peter"

## ..$ parents: chr [1:2] "Valerie" "Bob"

## ..$ partner: logi NA## Length Class Mode

## name 1 -none- character

## age 1 -none- numeric

## is_student 1 -none- logical

## scores 4 -none- numeric

## grade 1 -none- character

## relationships 3 -none- list4.4.3 Select and Modify

You can select and modify specific elements using indexing:

## [1] 25## [1] 25## [1] 25## $name

## [1] "John"

##

## $is_student

## [1] TRUE## [1] "Marc"4.4.4 Add Labels

You can examine and modify the names of the list elements:

## [1] "name" "age" "is_student" "scores" "grade"

## [6] "address"4.4.5 Combine Lists

You can merge lists with the c() function:

# Create two sample lists

list_1 <- list(name = "Alice", age = 30, is_female = TRUE)

list_2 <- list(job = "Engineer", city = "New York")

# Merge the lists

merged_list <- c(list_1, list_2)

print(merged_list)## $name

## [1] "Alice"

##

## $age

## [1] 30

##

## $is_female

## [1] TRUE

##

## $job

## [1] "Engineer"

##

## $city

## [1] "New York"For more control over the merging position, use the append() function. It offers an after argument to specify where the second list should be inserted:

# Merge the lists, placing the second list after the second element

merged_at_position <- append(list_1, list_2, after = 2)

print(merged_at_position)## $name

## [1] "Alice"

##

## $age

## [1] 30

##

## $job

## [1] "Engineer"

##

## $city

## [1] "New York"

##

## $is_female

## [1] TRUEHere, the append() function inserts the elements of list_2 immediately after the second element of list_1.

To reverse the order of a list’s elements, apply the rev() function:

## $Address

## [1] "123 Main St"

##

## $Grade

## [1] "B"

##

## $Scores

## [1] 88 90 78 92

##

## $`Is Student`

## [1] TRUE

##

## $Age

## [1] 25

##

## $`Full Name`

## [1] "John Travolta"4.4.6 Apply Family

The apply functions are not limited to vectors and matrices; they can be applied to lists, offering a more concise and optimized alternative to loops. This section delves into the use of apply functions with lists.

lapply: The

lapply()(list-apply) function is tailored specifically for lists. It applies a given function to each element of a list and consistently returns results in list format.# Define a sample list of numeric vectors sample_list <- list( a = c(2, 4, 6), b = c(3, 6, 9, 12), c = c(4, 8) ) # Use lapply to calculate the mean of each list element lapply(X = sample_list, FUN = mean)## $a ## [1] 4 ## ## $b ## [1] 7.5 ## ## $c ## [1] 6sapply: The

sapply()function (simplify-apply) aims to simplify the output, defaulting to vectors whenever feasible. Thus, unlike thelapply()function, its return type is not always a list.# Compute the mean of each list element and return a vector if possible sapply(X = sample_list, FUN = mean)## a b c ## 4.0 7.5 6.0vapply: The

vapply()function (value-apply) mirrorssapply()but with an additional provision: you can designate the expected return type. This feature not only ensures consistent output format but can also boost speed in specific cases.# Compute the mean (numeric) of each list element vapply(X = sample_list, FUN = mean, FUN.VALUE = numeric(1))## a b c ## 4.0 7.5 6.0# Compute the mean (numeric) and type (character) of each list element vapply(X = sample_list, FUN = function(vec) list(mean_value = mean(vec), type = class(vec)), FUN.VALUE = list(mean_value = numeric(1), type = character(1)))## a b c ## mean_value 4 7.5 6 ## type "numeric" "numeric" "numeric"

In summary, lists are inherently flexible and, due to this characteristic, play a pivotal role in many R tasks, from data manipulation to functional programming.

4.5 Data Frame (data.frame)

A data frame (data.frame) in R resembles a matrix in its two-dimensional, rectangular structure. However, unlike a matrix, a data frame allows each column to contain a different data type. Therefore, within each column (or vector), the elements must be homogeneous, but different columns can accommodate distinct types. Typically, when importing data into R, the default object type used is a data frame.

4.5.1 Create a Data Frame

Data frames can be formed using the data.frame() function:

# Create vectors for the data frame

names <- c("Anna", "Ella", "Sophia")

ages <- c(23, NA, 26)

female <- c(TRUE, TRUE, TRUE)

grades <- factor(c("A", "B", "A"), levels = rev(LETTERS[1:6]), ordered = TRUE)

major <- c("Math", "Biology", "Physics")

# Construct the data frame

students_df <- data.frame(name = names,

age = ages,

female = female,

grade = grades,

major = major)

print(students_df)## name age female grade major

## 1 Anna 23 TRUE A Math

## 2 Ella NA TRUE B Biology

## 3 Sophia 26 TRUE A PhysicsIn the above code chunk, we observe that like a matrix, every column in a data frame possesses an identical length (3 rows). However, the first and fifth columns are composed of character data (name, major), while the second column comprises numeric data (age), the third column consists of logical values (female), and the fourth column is an ordered factor (grade). This capacity to host varied data types in separate columns is what sets data frames apart from matrices.

4.5.2 Inspect a Data Frame

To identify the type and components of a data frame, the following functions can be utilized:

# Identify the data structure of the data frame

class(students_df) # Outputs "data.frame".

is.data.frame(students_df) # Outputs TRUE## [1] "data.frame"

## [1] TRUE## $name

## [1] "character"

##

## $age

## [1] "numeric"

##

## $female

## [1] "logical"

##

## $grade

## [1] "ordered" "factor"

##

## $major

## [1] "character"Many matrix operations are compatible with data frames. For instance, to determine the dimensions of a data frame, the functions nrow(), ncol(), and dim() can be used, analogous to their applications with matrices discussed in Chapter 4.3:

## [1] 3## [1] 5## [1] 3 5The head() and tail() functions are employed to extract the first and last n rows of a data frame, respectively, where n is defaulted to 6. They are particularly beneficial when working with large data frames, as displaying the entirety in the R console becomes unwieldy.

## name age female grade major

## 1 Anna 23 TRUE A Math

## 2 Ella NA TRUE B Biology## name age female grade major

## 2 Ella NA TRUE B BiologyFor data frames with many columns, which can be cumbersome to display fully using head() or tail(), RStudio’s View() function is beneficial. It displays the data frame in a spreadsheet style. It’s worth noting that the View() function is exclusive to RStudio and is not a feature of base R.

Furthermore, the summary() and str() functions provide detailed insights into a data frame’s columns in terms of their composition and characteristics. These tools are essential for understanding facets of the data that might not be immediately apparent from a cursory glance.

## 'data.frame': 3 obs. of 5 variables:

## $ name : chr "Anna" "Ella" "Sophia"

## $ age : num 23 NA 26

## $ female: logi TRUE TRUE TRUE

## $ grade : Ord.factor w/ 6 levels "F"<"E"<"D"<"C"<..: 6 5 6

## $ major : chr "Math" "Biology" "Physics"## name age female grade major

## Length:3 Min. :23.00 Mode:logical F:0 Length:3

## Class :character 1st Qu.:23.75 TRUE:3 E:0 Class :character

## Mode :character Median :24.50 D:0 Mode :character

## Mean :24.50 C:0

## 3rd Qu.:25.25 B:1

## Max. :26.00 A:2

## NA's :14.5.3 Select and Modify

Elements within a data frame can be accessed, modified, or created using various indexing methods:

## [1] B

## Levels: F < E < D < C < B < A# Obtain an entire column by its name

students_df$major

# Another method for name-based column access

students_df[["major"]]

# Yet another method for name-based column access

students_df[, "major"]## [1] "Math" "Biology" "Physics"

## [1] "Math" "Biology" "Physics"

## [1] "Math" "Biology" "Physics"## major

## 1 Math

## 2 Biology

## 3 Physics# Access multiple columns by their names

students_df[, c("name", "grade")]

# Another method for name-based multiple column access

students_df[c("name", "grade")]## name grade

## 1 Anna A

## 2 Ella B

## 3 Sophia A

## name grade

## 1 Anna A

## 2 Ella B

## 3 Sophia A# Extract rows based on specific criteria

selected_rows = students_df$age > 24 & students_df$grade == "A"

students_df[selected_rows, ]## name age female grade major

## 3 Sophia 26 TRUE A Physics# Introduce a new column (ensuring the vector's length matches the number of rows)

students_df$gpa <- c(3.7, 3.4, 3.9)

print(students_df)## name age female grade major gpa

## 1 Anna 23 TRUE A Math 3.7

## 2 Ella NA TRUE B Biology 3.4

## 3 Sophia 26 TRUE A Physics 3.9## name age grade major gpa

## 1 Anna 23 A Math 3.7

## 2 Ella NA B Biology 3.4

## 3 Sophia 26 A Physics 3.9The with() Function

The with() function is an indirect function that simplifies operations on data frame columns by eliminating the need for constant data frame referencing.

For instance, when working with a data frame like students_df, the conventional way to concatenate columns name and major would require referencing the data frame explicitly using students_df$name and students_df$major:

# Traditional approach: operation with repeated data frame reference

paste0(students_df$name, " studies ", students_df$major, ".")## [1] "Anna studies Math." "Ella studies Biology."

## [3] "Sophia studies Physics."Using with(), you can perform the same operation without the repeated references:

# Using with(): operation without repeated data frame reference

with(students_df, paste0(name, " studies ", major, "."))## [1] "Anna studies Math." "Ella studies Biology."

## [3] "Sophia studies Physics."Here are some more illustrative examples:

# Traditional approach: operations with repeated data frame reference

sum(students_df$gpa >= 3.5) / length(students_df$gpa) * 100 # Cum Laude Share

students_df[students_df$age > 24 & students_df$grade == "A", ] # Select rows

# Using with(): operations without repeated data frame reference

with(students_df, sum(gpa >= 3.5) / length(gpa) * 100) # Cum Laude Share

students_df[with(students_df, age > 24 & grade == "A"), ] # Select rows## [1] 66.66667

## name age grade major gpa

## 3 Sophia 26 A Physics 3.9

## [1] 66.66667

## name age grade major gpa

## 3 Sophia 26 A Physics 3.9In summary, the with() function streamlines operations involving data frames by setting a temporary environment for computations. This can make the code more concise and reduce the likelihood of errors due to repeated data object references.

4.5.4 Add Labels

You can examine and modify the names of the data frame rows and columns:

## [1] "name" "age" "grade" "major" "gpa"## [1] "1" "2" "3"# Change column names

colnames(students_df) <- c("Name", "Age", "Grade", "Major", "GPA")

# Change the name of a specific column

colnames(students_df)[colnames(students_df) == "Major"] <- "Field of Study"

students_df## Name Age Grade Field of Study GPA

## 1 Anna 23 A Math 3.7

## 2 Ella NA B Biology 3.4

## 3 Sophia 26 A Physics 3.94.5.5 Handle Missing Values

Data frames in R can also contain missing values, represented by NA:

## Name Age Grade Field of Study GPA

## 0 1 0 0 0## Name Age Grade Field of Study GPA

## 1 Anna 23 A Math 3.7

## 3 Sophia 26 A Physics 3.9# Replace missing values in 'Age' column with the mean age

students_df$Age[is.na(students_df$Age)] <- mean(students_df$Age, na.rm = TRUE)

print(students_df)## Name Age Grade Field of Study GPA

## 1 Anna 23.0 A Math 3.7

## 2 Ella 24.5 B Biology 3.4

## 3 Sophia 26.0 A Physics 3.94.5.6 Combine Data Frames

When handling data in R, there are instances where combining data from different sources or merging tables is necessary. In this context, functions like merge(), rbind(), and cbind() are invaluable. Let’s explore these functions with examples:

rbind(): This function allows you to concatenate data frames vertically, stacking one on top of the other. It is essential that the columns of both data frames match in both name and order:# Create data frames with identical column names (students_2022 <- data.frame(Name = c("Alice", "Bob"), Age = c(21, 22))) (students_2023 <- data.frame(Name = c("Charlie", "David"), Age = c(23, 24)))## Name Age ## 1 Alice 21 ## 2 Bob 22 ## Name Age ## 1 Charlie 23 ## 2 David 24## Name Age ## 1 Alice 21 ## 2 Bob 22 ## 3 Charlie 23 ## 4 David 24cbind(): This function combines data frames horizontally, side by side. It’s essential the data frames have the same number of rows, and the rows must have the same order. In the provided example, the first score of 91.1 corresponds with “Alice” from the first row, while the second score of 85.3 aligns with “Bob” from the second row:# Create a data frame with the same number of rows (scores_2022 <- data.frame(Score = c(91.1, 85.3)))## Score ## 1 91.1 ## 2 85.3## Name Age Score ## 1 Alice 21 91.1 ## 2 Bob 22 85.3merge(): Themerge()function comes into play when data frames don’t necessarily have rows in the same order, but possess a shared identifier (such as “Name”). This function aligns the data frames by the shared identifier, determined using thebyargument (such asby = "Name"):# Create a data frame with a common column "Name" (scores_2022 <- data.frame(Name = c("Bob", "Alice"), Score = c(85.3, 91.1)))## Name Score ## 1 Bob 85.3 ## 2 Alice 91.1## Name Age Score ## 1 Alice 21 91.1 ## 2 Bob 22 85.3merge()with Different Merge Types: Sometimes, two data frames intended for merging may have varying row counts. This can occur because one data frame contains extra entries not found in the other. In these cases, you can specify the type of merge to perform:- Inner Merge (

all = FALSE): Retains only the entries found in both data frames. - Outer Merge (

all = TRUE): Retains all unique entries from both data frames. - Left Merge (

all.x = TRUE): Keeps all entries from the first data frame, regardless of whether they have a match in the second data frame. - Right Merge (

all.y = TRUE): Keeps all entries from the second data frame.

If an entry is present in one data frame but absent in the other, the missing columns for that entry are populated with

NAvalues:# Construct a data frame with a partially shared "Name" column (scores_2022 <- data.frame(Name = c("Bob", "Eva"), Score = c(85.3, 78.3)))## Name Score ## 1 Bob 85.3 ## 2 Eva 78.3# Inner-merge retaining common 'Name' entries (benchmark) merge(students_2022, scores_2022, by = "Name", all = FALSE)## Name Age Score ## 1 Bob 22 85.3# Outer-merge retaining all unique 'Name' entries from both data frames merge(students_2022, scores_2022, by = "Name", all = TRUE)## Name Age Score ## 1 Alice 21 NA ## 2 Bob 22 85.3 ## 3 Eva NA 78.3# Left-merge to keep all rows from 'students_2022' merge(students_2022, scores_2022, by = "Name", all.x = TRUE)## Name Age Score ## 1 Alice 21 NA ## 2 Bob 22 85.3# Right-merge to retain all rows from 'scores_2022' merge(students_2022, scores_2022, by = "Name", all.y = TRUE)## Name Age Score ## 1 Bob 22 85.3 ## 2 Eva NA 78.3- Inner Merge (

do.call()+rbind(),cbind(), ormerge(): When you use the indirect functiondo.call()in combination with functions likerbind(),cbind(), ormerge()and supply a list of data frames, it effectively binds them together.For example, if you have a list of data frames and you want to bind them all together into one data frame by stacking them vertically:

# Create data frames for different months jan <- data.frame(Name = c("Eve", "Frank"), Age = c(25, 26)) feb <- data.frame(Name = c("Grace", "Harry"), Age = c(27, 28)) mar <- data.frame(Name = c("Irene"), Age = c(29)) # Save all data frames in a list list_of_dfs <- list(jan, feb, mar) # Combine all data frames by rows (combined_df <- do.call(rbind, list_of_dfs))## Name Age ## 1 Eve 25 ## 2 Frank 26 ## 3 Grace 27 ## 4 Harry 28 ## 5 Irene 29What

do.call()does here is similar to iteratively binding each data frame in the list. The resultingcombined_dfstacksjan,feb, andmaron top of each other. The advantage of utilizingdo.call(rbind, list_of_dfs)over the directrbind(jan, feb, mar)approach is its flexibility: it can merge any number of data frames within a list without needing to know their individual names or the total count. This makesdo.call()particularly valuable when the number of data frames is unpredictable, a scenario frequently encountered in data repositories. In such repositories, data might be segmented into separate data frames for each year. Using the conventionalrbind()method would necessitate yearly adjustments to accommodate the varying number of data frames. In contrast, thedo.call()method operates smoothly without such annual adjustments.

When combining multiple data frames, always check for consistency in column names and data types to prevent unexpected results.

4.5.7 Apply Family

In R, the apply functions execute repeated operations without the need for writing explicit loops. While initially tailored for matrices, they also offer great utility with data frames. This chapter elucidates the application of the apply family on data frames.

Apply by Columns and Rows

apply(): While initially intended for matrices,apply()can be used with data frames, treating them as lists of columns. Its core function is to process a function over rows or columns:# Sample data frame df <- data.frame(a = 1:4, b = 5:8) # Mean values across columns (resultant is a vector) apply(X = df, MARGIN = 2, FUN = mean)## a b ## 2.5 6.5lapply(): Primarily for lists,lapply()works seamlessly with data frames, which are inherently lists of vectors (columns). It processes a function over each column, producing a list:## $a ## [1] 2.5 ## ## $b ## [1] 6.5sapply(): A more concise form oflapply(),sapply()tries to yield a simplified output, outputting vectors, matrices, or lists based on the scenario:## a b ## 2.5 6.5vapply(): Resemblingsapply(), withvapply()you declare the return value’s type, ensuring a uniform output:# Derive mean for each column while stating the output type vapply(X = df, FUN = mean, FUN.VALUE = numeric(1))## a b ## 2.5 6.5mapply(): This is the “multivariate” version of apply. It applies a function across multiple input lists on an element-to-element basis:# Sample data sets df <- data.frame(x = 1:3, y = 4:6, z = 9:11) # Apply function with(df, mapply(FUN = function(x, y, z) (y - z)^x, x, y, z))## [1] -5 25 -125

Apply by Groups

When dealing with grouped or categorized data, the goal often becomes performing operations within these distinct groups rather than across entire rows or columns. Typically, a specific column in the data frame denotes these groups, categorizing each data point. R provides several functions, part of the apply family and beyond, that allow for such “group-wise” computation.

split(): Before diving into the apply-by-group functions, understandingsplit()is crucial. It partitions a data frame based on the levels of a factor, producing a list of data frames. This facilitates the application of functions to each subset individually.# Sample grouped data frame df_grouped <- data.frame(group = c("A", "A", "B", "B", "B"), value = c(10, 20, 30, 40, 50)) print(df_grouped)## group value ## 1 A 10 ## 2 A 20 ## 3 B 30 ## 4 B 40 ## 5 B 50# Splitting the data frame by 'group' split_data <- split(x = df_grouped, f = df_grouped$group) print(split_data)## $A ## group value ## 1 A 10 ## 2 A 20 ## ## $B ## group value ## 3 B 30 ## 4 B 40 ## 5 B 50Split-Apply-Combine strategy with

split()+lapply()+do.call()+c(): The split-apply-combine strategy is foundational in R. First, data is split into subsets based on some criteria (often a factor). Next, a function is applied to each subset independently. Finally, results are combined back into a useful data structure.# Split: Dividing the data based on 'group' (split_data <- split(x = df_grouped$value, f = df_grouped$group))## $A ## [1] 10 20 ## ## $B ## [1] 30 40 50# Apply: Summing the 'value' within each split group (applied_data <- lapply(X = split_data, FUN = sum))## $A ## [1] 30 ## ## $B ## [1] 120# Combine: combines results into a named vector (combined_data <- do.call(what = c, args = applied_data))## A B ## 30 120tapply(): Thetapply()function stands for “table-apply”. It quickly implements the split-apply-combine approach, using itsINDEXparameter to define the grouping.# Summing 'value' based on 'group' tapply(X = df_grouped$value, INDEX = df_grouped$group, FUN = sum)## A B ## 30 120aggregate(): Theaggregate()function employs the split-apply-combine approach, returning a data frame that combines group names with computed statistics for each group.# Using aggregate() to compute the sum for each group df_agg <- aggregate(x = value ~ group, data = df_grouped, FUN = sum) # Alternative: Using aggregate() with lists df_agg <- aggregate(x = list(value = df_grouped$value), by = list(group = df_grouped$group), FUN = sum) print(df_agg)## group value ## 1 A 30 ## 2 B 120- The formula

x = value ~ groupindicates that the function should summarize thevaluevariable based on thegroupvariable. - The

data = df_groupedargument specifies the dataset to use. - The

FUN = sumargument tells the function to compute the sum for each group. - Alternatively, using lists: the

xargument takes a list that specifies the variables to be aggregated, and thebyargument provides a list that determines how the dataset is grouped.

The result is a summary data frame where each unique level of the

groupvariable has a corresponding sum of thevaluevariable.- The formula

aggregate()with multiple variables: Theaggregate()function can handle scenarios that involve multiple group and value variables. When you have a dataset with more than one group and value column, you can use theaggregate()function to group by multiple columns and compute summaries over several value columns simultaneously.# Sample dataset with multiple groups and values df_advanced <- data.frame( group1 = c("A", "A", "A", "B", "B", "B"), group2 = c("X", "X", "Y", "X", "Y", "Y"), v1 = c(10, 20, 30, 40, 50, 60), v2 = c(5, 10, 15, 20, 25, 30) ) # Using aggregate() to compute the mean for each group1 & group2 combination df_agg <- aggregate(x = cbind(v1, v2) ~ group1 + group2, data = df_advanced, FUN = mean) # Alternative: Using aggregate() with lists df_agg <- aggregate(x = df_advanced[c("v1", "v2")], by = df_advanced[c("group1", "group2")], FUN = sum) print(df_agg)## group1 group2 v1 v2 ## 1 A X 30 15 ## 2 B X 40 20 ## 3 A Y 30 15 ## 4 B Y 110 55- The formula

cbind(v1, v2) ~ group1 + group2instructs R to group by bothgroup1andgroup2, and then summarize bothv1andv2. - The

data = df_advancedargument specifies the dataset to use. - The

FUN = meantells the function to calculate the mean for each grouped set of data. - In the list-based approach, the

xargument provides the data columns to aggregate, while thebyargument specifies the grouping columns.

The resulting output showcases the average values for

v1andv2for each unique combination ofgroup1andgroup2. This approach offers a concise way to produce summaries for complex datasets with multiple grouping variables.- The formula

In essence, group-wise computation is a cornerstone in many analyses. Knowing how to efficiently split, process, and combine data is pivotal. Functions in R, especially within the apply family, provide the tools to handle such computations with ease, making the data analysis process streamlined and robust.

4.5.8 Reshape Data Frames

Working with different data formats is essential for a seamless data analysis experience. In R, the two primary data structures are the wide and long formats:

Wide Format: In this configuration, each row corresponds to a unique observation, with all its associated measurements or characteristics spread across distinct columns. To illustrate, consider an individual named John. In the wide format, John would occupy a single row. Attributes such as age, gender, and IQ would each have their own columns. For John, these might be represented as separate columns with values 38, Male, and 120, respectively.

Table 4.1: Wide Format Example

Name

Age

Gender

IQ

John

38

Male

120

Marie

29

Female

121

Long Format: Contrary to the wide format, in the long format, each row stands for just one characteristic or measurement of an observation. As a result, a single entity might be represented across several rows. Using John as an example again, he would be spread across three rows, one for each attribute. The dataset would typically have three columns: one indicating the individual (John, John, John), one specifying the type of attribute (age, gender, IQ), and the last one containing the corresponding values (38, Male, 120).

Table 4.2: Long Format Example

Name

Attribute

Value

John

Age

38

John

Gender

Male

John

IQ

120

Marie

Age

29

Marie

Gender

Female

Marie

IQ

121

The reshape() function offers a robust method to toggle between these two formats.

# Sample data in wide format

wide_data <- data.frame(

name = c("John", "Marie"),

age = c(38, 29),

gender = c("Male", "Female"),

iq = c(120, 121)

)

print(wide_data)## name age gender iq

## 1 John 38 Male 120

## 2 Marie 29 Female 121# Convert to long format

long_data <- reshape(

data = wide_data,

direction = "long",

varying = list(attribute = c("age", "gender", "iq")),

times = c("Age", "Gender", "IQ"),

timevar = "attribute",

v.names = "value",

idvar = "name"

)

rownames(long_data) <- NULL

print(long_data)## name attribute value

## 1 John Age 38

## 2 Marie Age 29

## 3 John Gender Male

## 4 Marie Gender Female

## 5 John IQ 120

## 6 Marie IQ 121# Convert long format back to wide format

reshaped_wide_data <- reshape(

data = long_data,

direction = "wide",

timevar = "attribute",

v.names = "value",

idvar = "name",

sep = "_"

)

print(reshaped_wide_data)## name value_Age value_Gender value_IQ

## 1 John 38 Male 120

## 2 Marie 29 Female 121In the reshape function:

data: This specifies the data frame you intend to reshape.direction: Determines if you’re going from ‘wide’ to ‘long’ format or vice-versa.varying: Lists columns that you’ll be reshaping.times: This denotes unique times or measurements in the reshaped data.timevar: This names the column in the reshaped data that will contain the uniquetimesidentifiers.v.names: The name of the column in the reshaped data that will contain the data values.idvar: Specifies the identifier variable, which will remain the same between reshaped versions.sep: Used in converting from long to wide format, it defines the separator between the identifier and the measurement variables.

For beginners, the reshape() function might seem intricate due to its numerous parameters. Yet, with practice, it becomes a valuable tool in a data scientist’s toolkit. Always refer to R’s built-in documentation with ?reshape for additional details, or read the vignette available through vignette("reshape").

4.6 Tibble (tbl_df)

A tibble (tbl_df) refines the conventional data frame, offering a more user-friendly alternative. It is part of the tibble package by Müller and Wickham (2023), which is in the tidyverse collection of R packages (Wickham 2023c). For an introduction into the Tidyverse, consult Chapter 3.6.7.

To use tibbles, you need to install the tibble package by executing install.packages("tibble") in your console. Don’t forget to include library("tibble") at the beginning of your R script. If you’re already using the Tidyverse suite, a simple library("tidyverse") will suffice, as it internally loads the tibble package.

Despite their modern touch, tibbles remain data frames at their core. This duality is evident when the class() function, applied to a tibble, returns both "tbl_df" and "data.frame". Therefore, the operations and functions elucidated in the data frame section @ref(data.frame) are entirely compatible with tibbles.

Building on this compatibility, this chapter explores the Tidyverse way of manipulating tibbles, leveraging user-friendly functions from the dplyr package by Wickham et al. (2023), rather than solely relying on base functions. The dplyr package is one of the core packages of the tidyverse collection and specializes in data manipulation. The package offers a series of verbs (functions) for the most common data manipulation tasks. Let’s explore some of these functions and see how they can be applied to tibbles.

4.6.1 Create a Tibble

Tibbles can be formed using the tibble() function from the tibble package:

# Load tibble package

library("tibble")

# Create vectors for the tibble

names <- c("Anna", "Ella", "Sophia")

ages <- c(23, NA, 26)

female <- c(TRUE, TRUE, TRUE)

grades <- factor(c("A", "B", "A"), levels = rev(LETTERS[1:6]), ordered = TRUE)

major <- c("Math", "Biology", "Physics")

# Construct the tibble

students_tbl <- tibble(name = names,

age = ages,

female = female,

grade = grades,

major = major)

print(students_tbl)## # A tibble: 3 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Anna 23 TRUE A Math

## 2 Ella NA TRUE B Biology

## 3 Sophia 26 TRUE A PhysicsMoreover, tibbles support incremental construction by referencing a previously established tibble and appending additional columns:

# Initiate the tibble

students_tbl <- tibble(name = names,

age = ages,

female = female)

# Expand the tibble by adding columns

students_tbl <- tibble(students_tbl,

grade = grades,

major = major)

print(students_tbl)## # A tibble: 3 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Anna 23 TRUE A Math

## 2 Ella NA TRUE B Biology

## 3 Sophia 26 TRUE A PhysicsUnlike regular data frames, tibbles allow non-standard column names. You can use special characters or numbers as column names. Here’s an example:

# Construct a tibble with unconventional column names

tibble(`:)` = "smile",

` ` = "space",

`2000` = "number")## # A tibble: 1 × 3

## `:)` ` ` `2000`

## <chr> <chr> <chr>

## 1 smile space numberAnother way to create a tibble is with the tribble() function. It allows you to define column headings using formulas starting with ~ and separate entries with commas. Here’s an example:

## # A tibble: 2 × 3

## x y z

## <chr> <dbl> <dbl>

## 1 a 2 3.6

## 2 b 1 8.54.6.2 Inspect a Tibble

To discern the type and components of a tibble, the following functions can be employed:

# Identify the data structure of the tibble

class(students_tbl) # Outputs "tbl_df" "tbl" "data.frame".

is_tibble(students_tbl) # Outputs TRUE.

is.data.frame(students_tbl) # Outputs TRUE.

is.matrix(students_tbl) # Outputs FALSE.## [1] "tbl_df" "tbl" "data.frame"

## [1] TRUE

## [1] TRUE

## [1] FALSE## $name

## [1] "character"

##

## $age

## [1] "numeric"

##

## $female

## [1] "logical"

##

## $grade

## [1] "ordered" "factor"

##

## $major

## [1] "character"Inspecting tibbles can be achieved using functions like nrow(), ncol(), dim(), head(), tail(), str(), summarize(), and View(), much like their use for data frames as outlined in Chapter 4.5.2. However, when printing a tibble in R, the default behavior differs from that of a data frame. Only the first 10 rows and the columns that fit on the screen are displayed, accompanied by a message noting the additional rows and columns present. This makes the head() and tail() functions less necessary, as printing a tibble already provides a concise overview.

For a more concise structural overview of a tibble, instead of using str(), one might prefer the glimpse() function from the tibble package:

## Rows: 3

## Columns: 5

## $ name <chr> "Anna", "Ella", "Sophia"

## $ age <dbl> 23, NA, 26

## $ female <lgl> TRUE, TRUE, TRUE

## $ grade <ord> A, B, A

## $ major <chr> "Math", "Biology", "Physics"4.6.3 Select and Modify

The dplyr package (Wickham et al. 2023) from the Tidyverse provides an array of functions tailored for data manipulation with tibbles.

Select and Order Columns

Using select() to retrieve specific columns:

## # A tibble: 3 × 2

## name grade

## <chr> <ord>

## 1 Anna A

## 2 Ella B

## 3 Sophia A## # A tibble: 3 × 2

## name grade

## <chr> <ord>

## 1 Anna A

## 2 Ella B

## 3 Sophia AUsing select() to order columns alphabetically:

## # A tibble: 3 × 5

## age female grade major name

## <dbl> <lgl> <ord> <chr> <chr>

## 1 23 TRUE A Math Anna

## 2 NA TRUE B Biology Ella

## 3 26 TRUE A Physics Sophia## # A tibble: 3 × 5

## age female grade major name

## <dbl> <lgl> <ord> <chr> <chr>

## 1 23 TRUE A Math Anna

## 2 NA TRUE B Biology Ella

## 3 26 TRUE A Physics SophiaFilter and Order Rows

Use filter() to extract rows based on conditions:

## # A tibble: 1 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Sophia 26 TRUE A Physics## # A tibble: 1 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Sophia 26 TRUE A PhysicsThe rows can be ordered with the arrange() function:

## # A tibble: 3 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Sophia 26 TRUE A Physics

## 2 Anna 23 TRUE A Math

## 3 Ella NA TRUE B Biology## # A tibble: 3 × 5

## name age female grade major

## <chr> <dbl> <lgl> <ord> <chr>

## 1 Sophia 26 TRUE A Physics

## 2 Anna 23 TRUE A Math

## 3 Ella NA TRUE B BiologyIn the dplyr approach, the desc() function is utilized to order values in descending order.

Add and Remove Columns

To create a new column, employ mutate():

# Base R method

students_tbl$gpa <- c(3.7, 3.4, 3.9)

# With dplyr

students_tbl <- mutate(students_tbl, gpa = c(3.7, 3.4, 3.9))

print(students_tbl)## # A tibble: 3 × 6

## name age female grade major gpa

## <chr> <dbl> <lgl> <ord> <chr> <dbl>

## 1 Anna 23 TRUE A Math 3.7

## 2 Ella NA TRUE B Biology 3.4

## 3 Sophia 26 TRUE A Physics 3.9Columns can be removed using select():

# Base R method

students_tbl$female <- NULL

# With dplyr

students_tbl <- select(students_tbl, -female)

print(students_tbl)## # A tibble: 3 × 5

## name age grade major gpa

## <chr> <dbl> <ord> <chr> <dbl>

## 1 Anna 23 A Math 3.7

## 2 Ella NA B Biology 3.4

## 3 Sophia 26 A Physics 3.9Manipulate Columns

To construct new values for each row based on existing columns, you can utilize the reframe() function:

## [1] "Anna studies Math." "Ella studies Biology."

## [3] "Sophia studies Physics."## # A tibble: 3 × 1

## `paste0(name, " studies ", major, ".")`

## <chr>

## 1 Anna studies Math.

## 2 Ella studies Biology.

## 3 Sophia studies Physics.The key difference between mutate() and reframe() is that mutate() returns the full tibble, while reframe() only returns the newly computed column:

# Using mutate() for comparison

mutate(students_tbl, new_description = paste0(name, " studies ", major, "."))## # A tibble: 3 × 6

## name age grade major gpa new_description

## <chr> <dbl> <ord> <chr> <dbl> <chr>

## 1 Anna 23 A Math 3.7 Anna studies Math.

## 2 Ella NA B Biology 3.4 Ella studies Biology.

## 3 Sophia 26 A Physics 3.9 Sophia studies Physics.To compute summary statistics over all rows, the summarize() or summarise() function can be employed:

## [1] 3.666667## # A tibble: 1 × 1

## `mean(gpa)`

## <dbl>

## 1 3.67The difference between mutate() and summarize() is that mutate() keeps the tibble format, thus, in this case, mutate() creates a new column with the average values repeated multiple times:

## # A tibble: 3 × 6

## name age grade major gpa mean_gpa

## <chr> <dbl> <ord> <chr> <dbl> <dbl>

## 1 Anna 23 A Math 3.7 3.67

## 2 Ella NA B Biology 3.4 3.67

## 3 Sophia 26 A Physics 3.9 3.67Note that while base R returns a scalar, reframe() and summarize() keep the tibble structure. To extract just the value, use the pull() function:

## [1] 3.666667Use the Pipe Operator

The Tidyverse introduces the pipe operator, %>%. This operator, detailed in Chapter 3.6.7, allows for sequential execution of functions:

## # A tibble: 3 × 4

## name major gpa is_cum_laude

## <chr> <chr> <dbl> <lgl>

## 1 Anna Math 3.7 TRUE

## 2 Ella Biology 3.4 FALSE

## 3 Sophia Physics 3.9 TRUE# Employ the pipe operator

students_tbl %>%

select(-age, -grade) %>%

mutate(is_cum_laude = gpa >= 3.5)## # A tibble: 3 × 4

## name major gpa is_cum_laude

## <chr> <chr> <dbl> <lgl>

## 1 Anna Math 3.7 TRUE

## 2 Ella Biology 3.4 FALSE

## 3 Sophia Physics 3.9 TRUEWith the pipe operator, operations become more readable and straightforward, promoting cleaner code structuring.

4.6.4 Add Labels

To modify the names of the columns in a tibble, you can use the rename() function from the dplyr package:

# Base R method

colnames(students_tbl) <- c("Name", "Age", "Grade", "Major", "GPA")

# With dplyr

students_tbl <- students_tbl %>% rename(Name = name,

Age = age,

Grade = grade,

Major = major,

GPA = gpa)

print(students_tbl)## # A tibble: 3 × 5

## Name Age Grade Major GPA

## <chr> <dbl> <ord> <chr> <dbl>

## 1 Anna 23 A Math 3.7

## 2 Ella NA B Biology 3.4

## 3 Sophia 26 A Physics 3.9The rename() function is particularly useful when modifying only a subset of column:

# Base R method

colnames(students_tbl)[colnames(students_tbl) == "Major"] <- "Field of Study"

# With dplyr

students_tbl <- students_tbl %>% rename(`Field of Study` = Major)

print(students_tbl)## # A tibble: 3 × 5

## Name Age Grade `Field of Study` GPA

## <chr> <dbl> <ord> <chr> <dbl>

## 1 Anna 23 A Math 3.7

## 2 Ella NA B Biology 3.4

## 3 Sophia 26 A Physics 3.94.6.5 Handle Missing Values

Tibbles can contain missing values (NA) just like data frames. To manage these missing values, you can use the drop_na() function from the tidyr package by Wickham, Vaughan, and Girlich (2024), part of the Tidyverse.

# Load the tidyr package

library("tidyr")

# Remove all rows containing missing values

# --> Base R method

na.omit(students_tbl)

# --> With tidyr

students_tbl %>%

drop_na()## # A tibble: 2 × 5

## Name Age Grade `Field of Study` GPA

## <chr> <dbl> <ord> <chr> <dbl>

## 1 Anna 23 A Math 3.7

## 2 Sophia 26 A Physics 3.9The drop_na() function offers the flexibility to target specific columns for NA checking, e.g. drop_na(Age), ensuring that less critical columns with numerous NA values don’t substantially reduce the dataset. For more details, consult the function documentation by executing ?drop_na.

To replace missing values, you can use functions from the dplyr package:

# Replace missing 'Age' values with mean age

# --> Base R method

students_tbl$Age[is.na(students_tbl$Age)] <- mean(students_tbl$Age, na.rm = TRUE)

# --> With dplyr

students_tbl <- students_tbl %>%

mutate(mean_age = mean(students_tbl$Age, na.rm = TRUE)) %>%

mutate(Age = ifelse(is.na(Age), mean_age, Age)) %>%

select(-mean_age)

print(students_tbl)## # A tibble: 3 × 5

## Name Age Grade `Field of Study` GPA

## <chr> <dbl> <ord> <chr> <dbl>

## 1 Anna 23 A Math 3.7

## 2 Ella 24.5 B Biology 3.4

## 3 Sophia 26 A Physics 3.9Here, the mutate() function replaces NA values in the ‘Age’ column with the mean age, calculated with na.rm = TRUE to ignore NAs in the calculation.

4.6.6 Combine Tibbles

When dealing with multiple tibbles, the Tidyverse provides powerful tools for combination and merging. Specifically, the dplyr package by Wickham et al. (2023) offers functions such as bind_rows(), bind_cols(), and various *_join() methods like inner_join(). Additionally, the purrr package by Wickham and Henry (2023) introduces the map_*() functions, including map_dfr(). Here’s how they work:

bind_rows(): This function is the Tidyverse equivalent ofrbind()and stacks tibbles vertically. It requires that the columns have matching names:# Create tibbles with identical column names (students_2022 <- tibble(Name = c("Alice", "Bob"), Age = c(21, 22))) (students_2023 <- tibble(Name = c("Charlie", "David"), Age = c(23, 24)))## # A tibble: 2 × 2 ## Name Age ## <chr> <dbl> ## 1 Alice 21 ## 2 Bob 22 ## # A tibble: 2 × 2 ## Name Age ## <chr> <dbl> ## 1 Charlie 23 ## 2 David 24## # A tibble: 4 × 2 ## Name Age ## <chr> <dbl> ## 1 Alice 21 ## 2 Bob 22 ## 3 Charlie 23 ## 4 David 24bind_cols(): This function is the Tidyverse equivalent ofcbind()and aligns tibbles horizontally. Ensure the tibbles have the same number of rows:## # A tibble: 2 × 1 ## Score ## <dbl> ## 1 91.1 ## 2 85.3## # A tibble: 2 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Alice 21 91.1 ## 2 Bob 22 85.3inner_join(): Similar tomerge(),inner_join()matches tibbles based on a shared identifier specified by thebyargument:# Create a tibble with a common column "Name" (scores_2022 <- tibble(Name = c("Bob", "Alice"), Score = c(85.3, 91.1)))## # A tibble: 2 × 2 ## Name Score ## <chr> <dbl> ## 1 Bob 85.3 ## 2 Alice 91.1## # A tibble: 2 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Alice 21 91.1 ## 2 Bob 22 85.3Different Types of Joins with

dplyr: Withdplyr, there are specialized functions for each type of merge/join operation, making it more intuitive:- Inner Join: Retained entries are only those found in both tibbles.

- Full (Outer) Join: Keeps all entries from both tibbles.

- Left Join: Keeps all entries from the first tibble.

- Right Join: Retains all entries from the second tibble.

When an entry exists in one tibble but not the other, the missing columns for that entry will be filled with

NAvalues:# Construct a tibble with a partially shared "Name" column (scores_2022 <- tibble(Name = c("Bob", "Eva"), Score = c(85.3, 78.3)))## # A tibble: 2 × 2 ## Name Score ## <chr> <dbl> ## 1 Bob 85.3 ## 2 Eva 78.3## # A tibble: 1 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Bob 22 85.3# Full (outer) join to retain all unique 'Name' entries from both tibbles full_join(students_2022, scores_2022, by = "Name")## # A tibble: 3 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Alice 21 NA ## 2 Bob 22 85.3 ## 3 Eva NA 78.3# Left join to keep all rows from 'students_2022' left_join(students_2022, scores_2022, by = "Name")## # A tibble: 2 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Alice 21 NA ## 2 Bob 22 85.3# Right join to keep all rows from 'scores_2022' right_join(students_2022, scores_2022, by = "Name")## # A tibble: 2 × 3 ## Name Age Score ## <chr> <dbl> <dbl> ## 1 Bob 22 85.3 ## 2 Eva NA 78.3map_dfr()andmap_dfc(): For combining multiple tibbles stored in a list, thepurrrpackage provides themap_dfr()andmap_dfc()functions, which are more flexible alternatives to usingdo.call()in combination withrbind(), orcbind():# Load purrr package library("purrr") # Create tibbles for different months jan <- tibble(Name = c("Eve", "Frank"), Age = c(25, 26)) feb <- tibble(Name = c("Grace", "Harry"), Age = c(27, 28)) mar <- tibble(Name = c("Irene"), Age = c(29)) # Store all tibbles in a list list_of_tibbles <- list(jan, feb, mar) # Combine all tibbles by rows (combined_tibble <- map_dfr(.x = list_of_tibbles, .f = ~.x))## # A tibble: 5 × 2 ## Name Age ## <chr> <dbl> ## 1 Eve 25 ## 2 Frank 26 ## 3 Grace 27 ## 4 Harry 28 ## 5 Irene 29With this approach,

map_dfr()sequentially combines each tibble in the list by rows. This method is particularly useful when the number of tibbles to be combined is unknown or variable, offering more flexibility than calling functions likebind_rows(jan, feb, mar)directly. Themap_dfc()function operates similarly but combines the tibbles column-wise instead of row-wise.Within the

map_*()functions, the argument.f = ~.xis a shorthand formula notation which translates to.f = function(x) x. This means that for each tibble in the list, the function takes the tibble as it is and returns it without any transformation, making it an efficient way to combine them row- or column-wise.reduce()+*_join(): When tasked with merging multiple tibbles stored in a list based on a common column, the combination ofpurrr::reduce()anddplyr::*_join()functions such asdplyr::full_join()offers a more flexible alternative to usingdo.call()in combination withmerge():# Construct a tibble with a partially shared "Name" column (subject_2022 <- tibble(Name = c("Bob", "Pete"), Subject = c("History", "Economics")))## # A tibble: 2 × 2 ## Name Subject ## <chr> <chr> ## 1 Bob History ## 2 Pete Economics# A list comprising the tibbles to be merged list_of_tibbles <- list(students_2022, scores_2022, subject_2022) # Execute an outer-merge using reduce() and full_join() (merged_tibble <- reduce(list_of_tibbles, .f = ~full_join(.x, .y, by = "Name")))## # A tibble: 4 × 4 ## Name Age Score Subject ## <chr> <dbl> <dbl> <chr> ## 1 Alice 21 NA <NA> ## 2 Bob 22 85.3 History ## 3 Eva NA 78.3 <NA> ## 4 Pete NA NA Economics

4.6.7 Apply Family

In R, the Tidyverse collection of packages offers functions for manipulating tibbles in a more readable and consistent manner. This chapter focuses on these functionalities that replace the traditional apply family used with data frames.

Apply by Columns and Rows

map(): Instead ofapply(), use thepurrr::map()function to process each column of a tibble:# Sample tibble tb <- tibble(a = 1:4, b = 5:8) # Mean values across columns (resultant is a list) map(tb, mean)## $a ## [1] 2.5 ## ## $b ## [1] 6.5map_dbl(): Similar tolapply(), thepurrr::map_dbl()function processes each column and returns a double vector:## a b ## 2.5 6.5

Apply by Groups

When dealing with grouped data, you can perform operations within these distinct groups using Tidyverse functions.

group_by()+summarize(): This pair of functions fromdplyrreplaces the need forsplit()andlapply():# Sample grouped tibble tb_grouped <- tibble(group = c("A", "A", "B", "B", "B"), value = c(10, 20, 30, 40, 50)) # Summarizing 'value' based on 'group' tb_grouped %>% group_by(group) %>% summarize(sum_value = sum(value))## # A tibble: 2 × 2 ## group sum_value ## <chr> <dbl> ## 1 A 30 ## 2 B 120group_by()+summarize()for Multiple Groups and Variables: Thedplyrfunctions can handle multiple group and value variables:# Sample tibble with multiple groups and values tb_advanced <- tibble( group1 = c("A", "A", "A", "B", "B", "B"), group2 = c("X", "X", "Y", "X", "Y", "Y"), v1 = c(10, 20, 30, 40, 50, 60), v2 = c(5, 10, 15, 20, 25, 30) ) # Summarize for each group1 & group2 combination tb_advanced %>% group_by(group1, group2) %>% summarize(mean_v1 = mean(v1), mean_v2 = mean(v2))## # A tibble: 4 × 4 ## # Groups: group1 [2] ## group1 group2 mean_v1 mean_v2 ## <chr> <chr> <dbl> <dbl> ## 1 A X 15 7.5 ## 2 A Y 30 15 ## 3 B X 40 20 ## 4 B Y 55 27.5

4.6.8 Reshape Tibbles

As discussed in Chapter 4.5.8, data can be organized in either a wide or long format:

Wide Format: The wide format has each row representing a unique observation, with various attributes detailed across columns.

Long Format: Contrary to the wide format, the long format has each row representing a single attribute or measure of an observation, resulting in multiple rows for each observation.

The tidyr package by Wickham, Vaughan, and Girlich (2024) provides two key functions for reshaping data: pivot_longer() and pivot_wider():

# Load tidyr package

library("tidyr")

# Create tibble using wide format

wide_data <- tibble(

Name = c("John", "Marie"),

Age = c(38, 29),

Gender = c("Male", "Female"),

IQ = c(120, 121)

)

print(wide_data)## # A tibble: 2 × 4

## Name Age Gender IQ

## <chr> <dbl> <chr> <dbl>

## 1 John 38 Male 120

## 2 Marie 29 Female 121# Coerce all varying attributes to character type

wide_data <- wide_data %>%

mutate(across(where(is.numeric), as.character))

# Pivot to long format

long_data <- wide_data %>%

pivot_longer(cols = -Name,

names_to = "Attribute",

values_to = "Value")

print(long_data)## # A tibble: 6 × 3

## Name Attribute Value

## <chr> <chr> <chr>

## 1 John Age 38

## 2 John Gender Male

## 3 John IQ 120

## 4 Marie Age 29

## 5 Marie Gender Female

## 6 Marie IQ 121# Convert long format back to wide format

reshaped_wide_data <- long_data %>%

pivot_wider(names_from = Attribute,

values_from = Value)

print(reshaped_wide_data)## # A tibble: 2 × 4

## Name Age Gender IQ

## <chr> <chr> <chr> <chr>

## 1 John 38 Male 120

## 2 Marie 29 Female 121In pivot_longer() and pivot_wider():

cols: Specifies the columns to pivot into longer or wider format.names_to: Names the column in the reshaped data containing the unique identifiers.values_to: Names the column in the reshaped data that will hold the data values.names_from: Indicates the column that will provide names in the widened data.values_from: Indicates the column that will provide values in the widened data.

For those new to tibbles and tidyverse, these functions may initially seem complicated. However, they offer a straightforward way to reshape data in R. You can always refer to the official documentation (?pivot_longer, ?pivot_wider) or read available vignettes for further insights.

4.7 Data Table (data.table)

A data table (data.table) is similar to a data frame but with more advanced features for data manipulation. Credited to the data.table package by Barrett et al. (2024), data tables are known for their high-speed operations, particularly beneficial for large datasets. This sets them apart from tibbles which, although more user-friendly, may not be as optimized for speed. Consequently, data.table and tibble can be seen as competitors, each improving upon the basic data frame in their unique ways. Like tibble, data.table is not a part of base R. It requires the installation of the data.table package via install.packages("data.table"), followed by library("data.table") at the beginning of your script.

The syntax of data.table differs significantly from the syntax used in the Tidyverse, making the R codes appear almost as distinct languages. While my research often employs data.table for its efficiency, in this book, I have chosen to emphasize tibble and the associated Tidyverse syntax. This decision is primarily due to the more intuitive and user-friendly nature of the Tidyverse.

For those who wish to explore the data.table package in more depth, I suggest starting with its vignette. You can access it in R using:

If you’re unsure about the specific vignettes available, list all vignettes associated with data.table:

Then, based on your interest, you can select a vignette and view it with the vignette() function, as shown earlier.

Moreover, for structured learning on data.table, DataCamp offers comprehensive courses including:

- Data Manipulation with data.table in R

- Joining Data with data.table in R

- Time Series with data.table in R

These courses provide an in-depth exploration, from basic operations to advanced manipulations using data.table.

4.8 Extensible Time Series (xts)

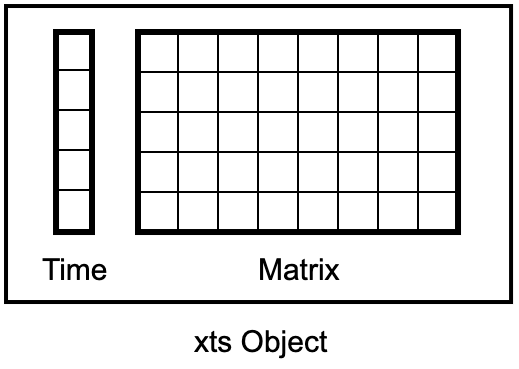

The extensible time series (xts) object of the xts package by Ryan and Ulrich (2024b) pairs a matrix with a time index:

Figure 4.1: xts Object as a Matrix with Time Index

In xts, each row of a matrix is marked with a unique timestamp, typically formatted as a Date or POSIXct. Details on creating Date or POSIXct time indices can be gathered from Chapter 3.3. It’s important to note that an xts object’s base is a matrix, not a data frame or tibble, which mandates that all columns share the same data type. This means an xts object contains exclusively numbers or characters but not a mix of the two. While most matrix-related functions from Chapter 4.3 are compatible with xts objects, xts offers an additional set of specialized functions tailored for handling time series data.

By design, xts objects always sort data chronologically, from the earliest to the latest observation. If you have use-cases that demand arranging time series data based on other criteria (e.g., size of stock returns), it’s necessary to first revert the xts object back to a matrix or a data frame.

xts objects are fundamentally based on zoo objects from the zoo package by Zeileis, Grothendieck, and Ryan (2023), named after Zeileis’ ordered observations. These, too, are time-indexed data structures, but the xts package introduces additional functionalities.

To integrate xts into your workflow, it needs to be installed first using install.packages("xts"). Subsequently, invoking library("xts") at the beginning of your script loads the package.

4.8.1 Create an xts Object

An xts object can be created using the xts() function. This function binds data with its respective time index (order.by = time_index):

# Load xts package

library("xts")

# Create a data matrix

data <- matrix(1:12, ncol = 2, dimnames = list(NULL, c("a", "b")))

print(data)## a b

## [1,] 1 7

## [2,] 2 8

## [3,] 3 9

## [4,] 4 10

## [5,] 5 11

## [6,] 6 12# Create a time index of the same length as the data matrix

time_index <- seq(as.Date("1995-11-01"), as.Date("1996-04-01"), by = "month")

print(time_index)## [1] "1995-11-01" "1995-12-01" "1996-01-01" "1996-02-01" "1996-03-01"

## [6] "1996-04-01"# Create an xts object based on the data matrix its time index

dxts <- xts(x = data, order.by = time_index)

print(dxts)## a b

## 1995-11-01 1 7

## 1995-12-01 2 8

## 1996-01-01 3 9

## 1996-02-01 4 10

## 1996-03-01 5 11

## 1996-04-01 6 124.8.2 Inspect an xts Object

Before delving into the specifics of an xts object, it’s helpful to grasp its overall structure and the type of time index it utilizes.

# Check data structure and time index type

class(dxts) # Returns "xts" and "zoo", but it's also a "matrix".

typeof(dxts) # Returns "integer".

tclass(dxts) # Returns "Date".

is.xts(dxts) # Returns TRUE.

is.zoo(dxts) # Returns TRUE.

is.matrix(dxts) # Returns TRUE.